Copilot's code reviews

Code reviews

Love them or hate them, pull request reviews are part of most developers' lives. You try to understand the context of the pull request and consider whether the code quality is good, whether it has bugs, whether the test cases cover everything… A good review should consider all of these and more, all while not pissing off your coworker by sounding too harsh about the code they worked so hard to push out. In an ideal world this would be a great place for an AI to save some of a developer's valuable time: the scope is limited, the aim is clear and the measure of quality is somewhat defined. But in my experience Copilot's code reviews are very hit and miss.

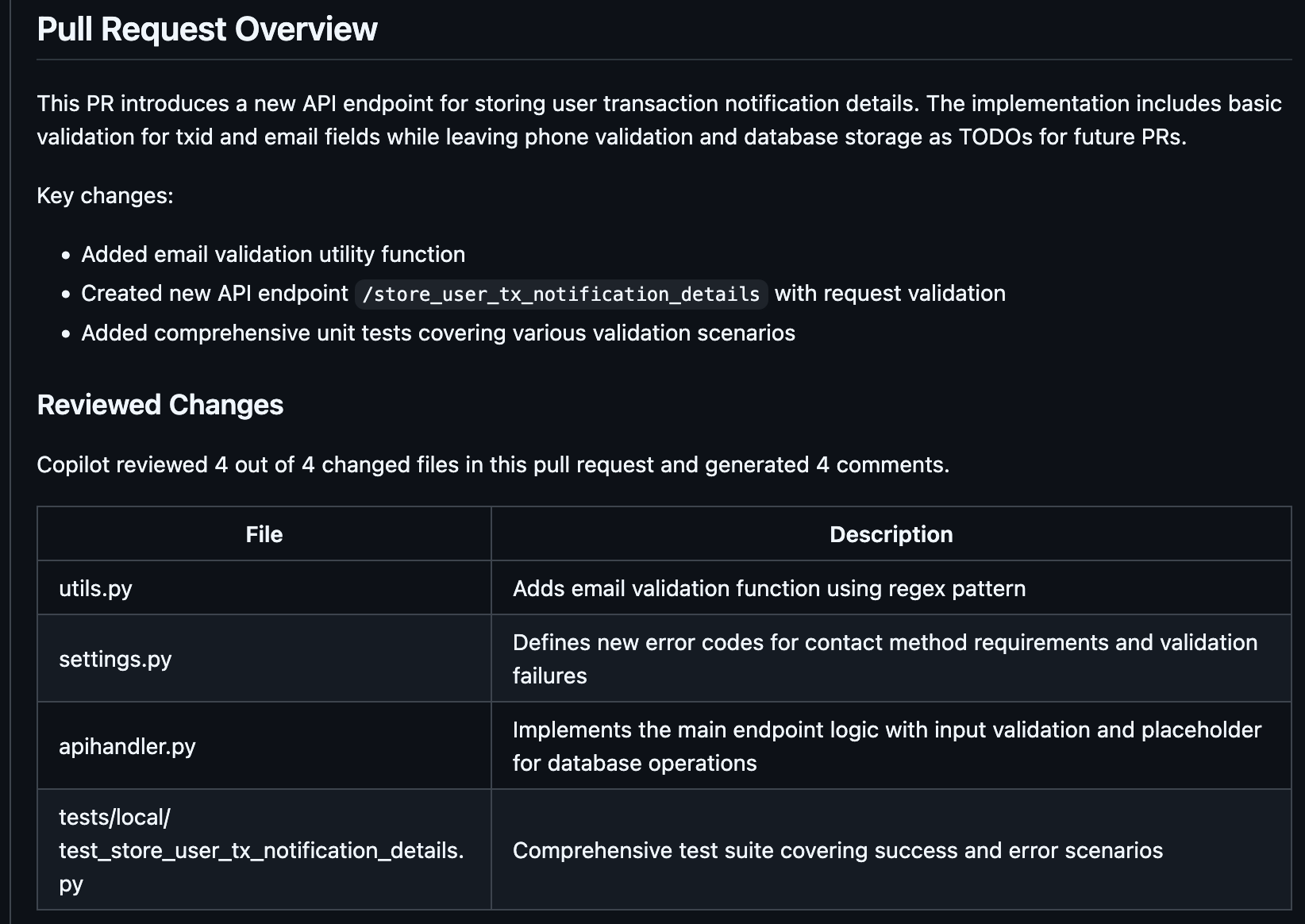

Let's take a relatively small real-world PR as an example. The PR was really well written: not too many lines of code, not too many changes at once, in line with the trunk-based development we are using, and unit tests were even included right off the bat!

The summary is beautifully formatted and at first glance looks like a really valuable tool for the reviewer to get the big picture of the pull request. But during your initial code review you read through the code and see all of these changes (and need to understand them) yourself, forming your own opinion based on your knowledge of the code base. At that point the summary starts to feel pretty hollow and the value is just not there. These PR summaries written by Copilot remind me of a code smell, comments that only restate what the code already says:

    # Function to calculate the total price of items with tax
    def calculate_total_price(items, tax_rate):
        # Initialize total price to zero
        total_price = 0
        # Loop through each item in the list of items
        for item in items:
            # Add the item's price to the running total
            total_price += item['price']

Don't do this!
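For contrast, here is a minimal sketch of how the same function could look when the comments explain the why instead of narrating each line. The snippet above stops before `tax_rate` is ever used, so how the tax is applied here (once, on the subtotal) is my assumption for the sake of the illustration.

```python
def calculate_total_price(items, tax_rate):
    """Return the total price of all items with tax included."""
    subtotal = sum(item["price"] for item in items)
    # Assumption for this sketch: tax is applied once to the subtotal,
    # not per item, so any rounding happens in a single place.
    return subtotal * (1 + tax_rate)
```

The code says what happens; the single comment is saved for the one decision a reader could not guess from the code itself.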

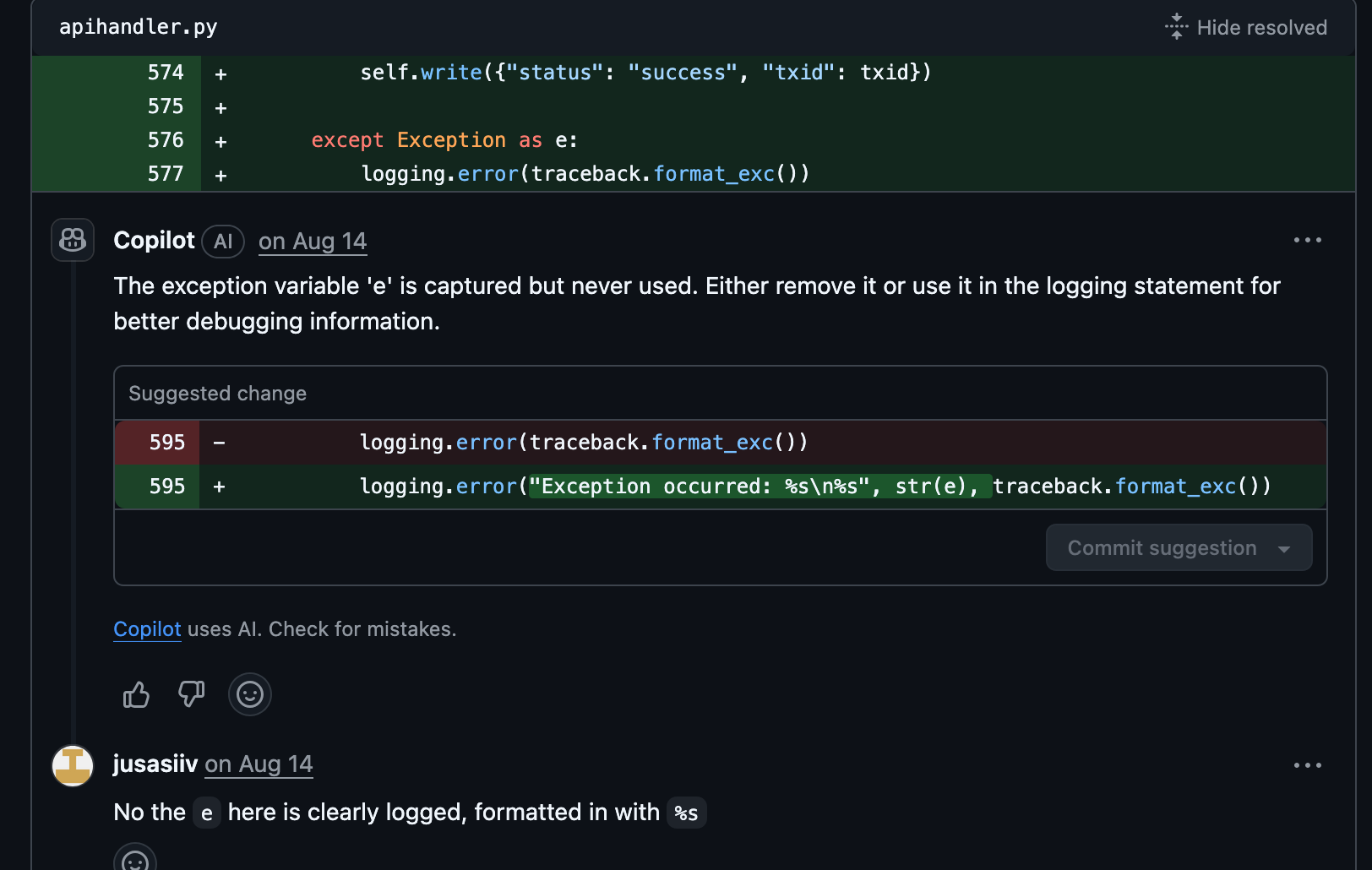

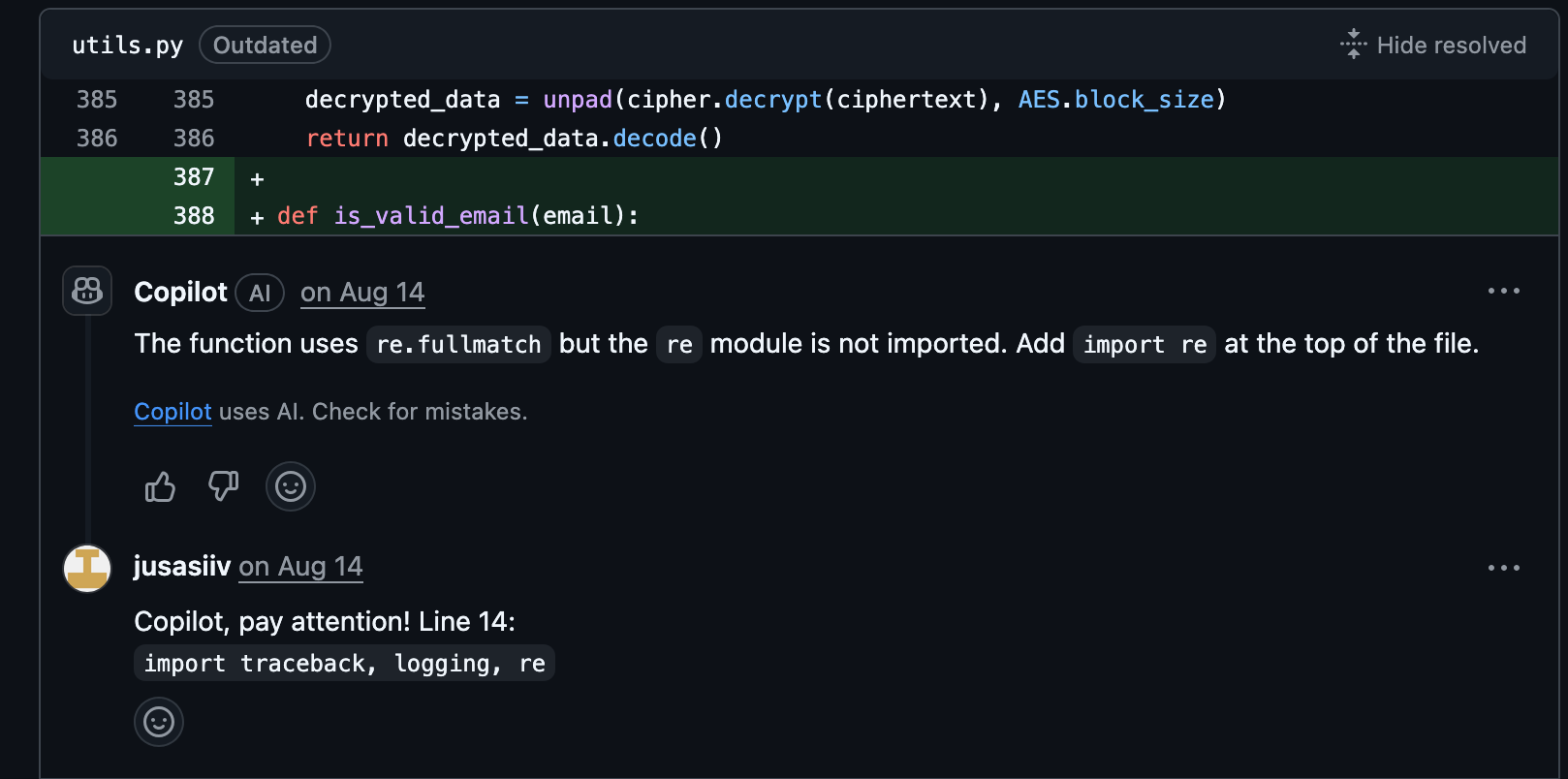

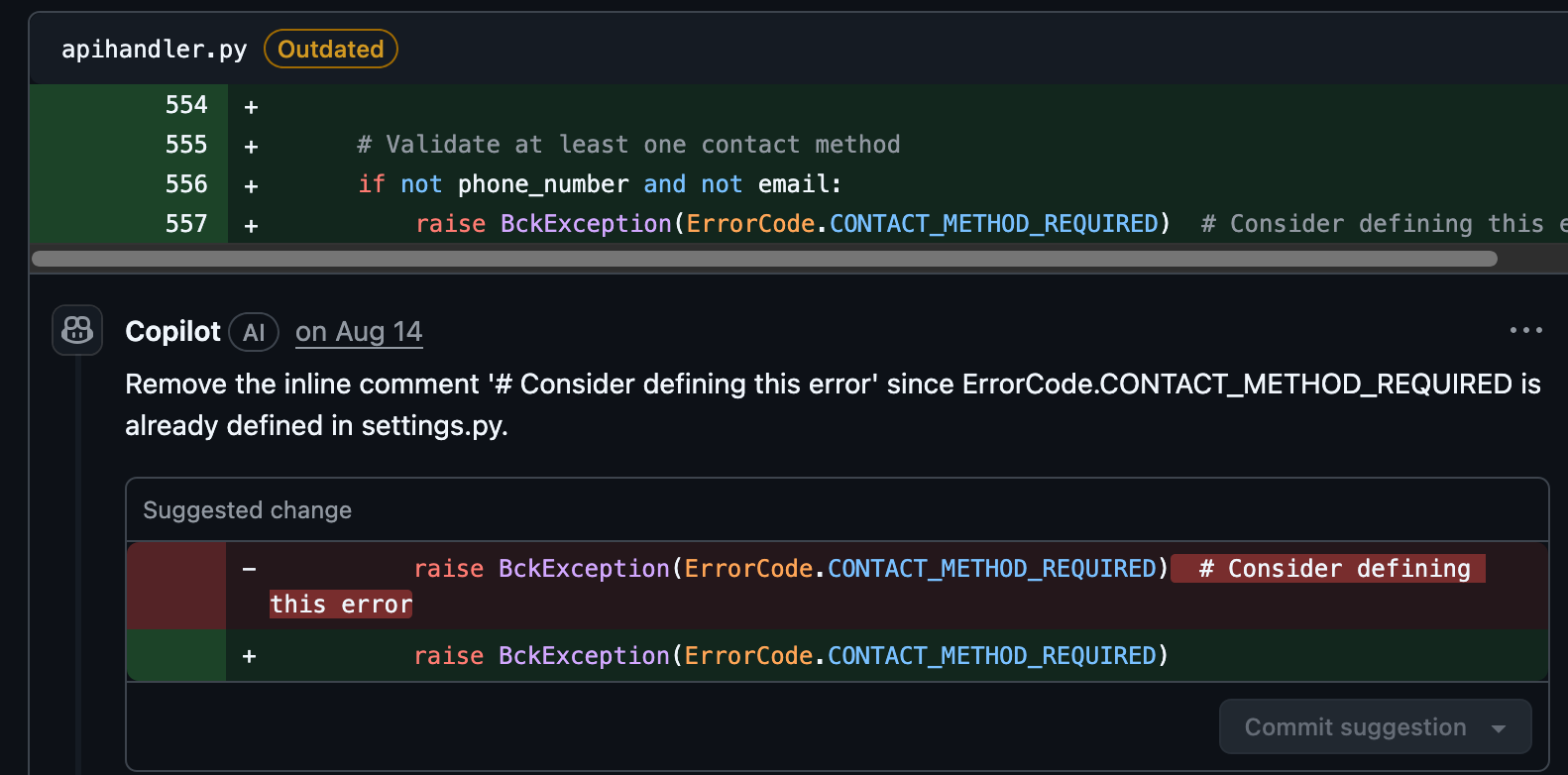

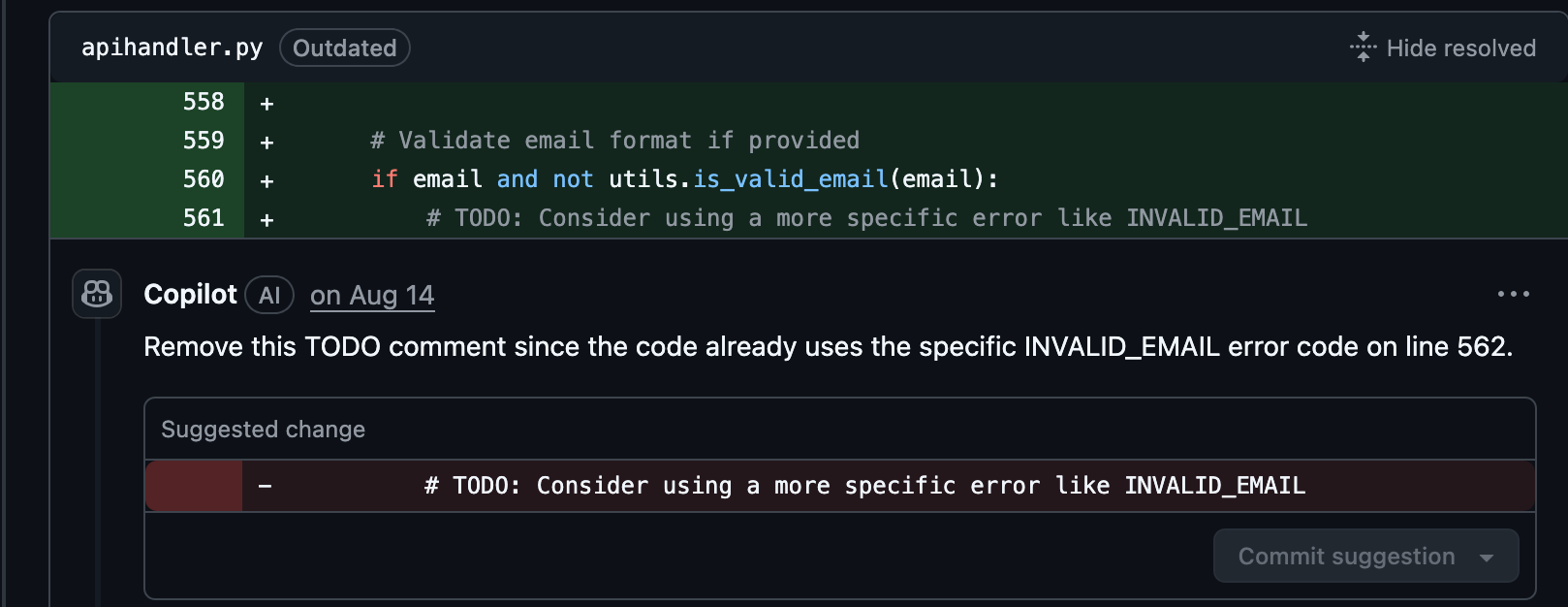

What about the actual code review? Well, that was not a great success either, with two comments being clearly just wrong:

And two comments nagged about TODOs that should be removed (yes, they should be, but I don't think we needed all of the electricity wasted on the AI agent for this info):

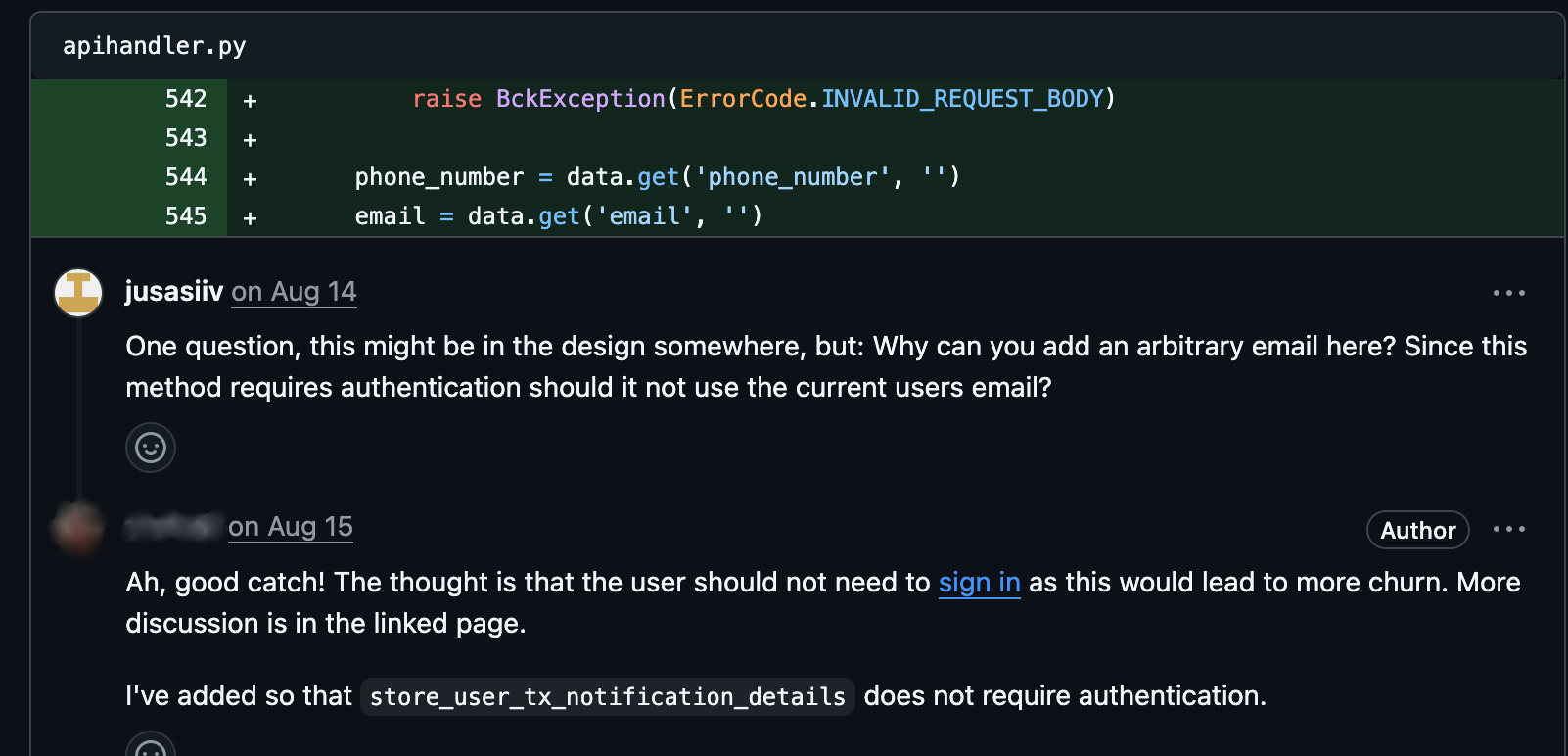

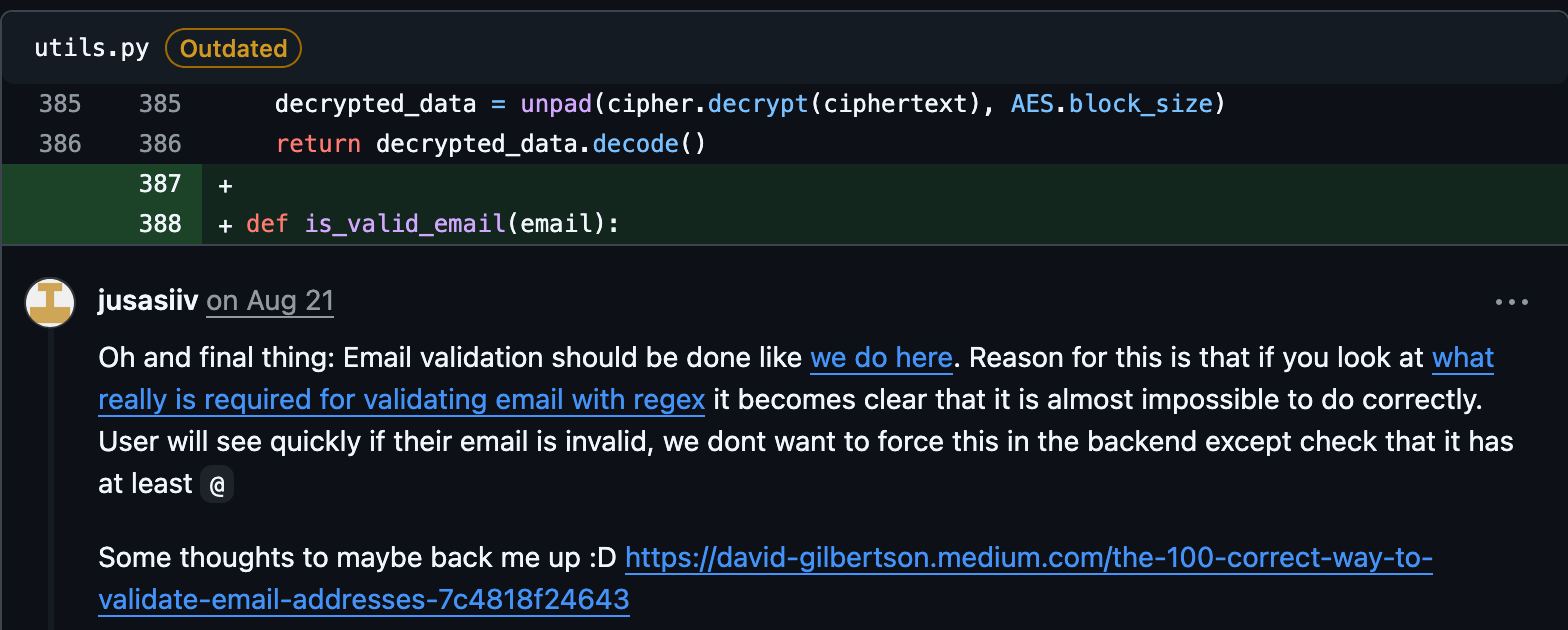

These were my actual code review comments:

Copilot did not suggest these changes, since the code was technically correct. Because it did not consider what the actual usage would be, it could not see that the endpoint should not be authenticated. I also missed the unnecessary auth at first, but after noticing the email parameter my coworker and I came to the correct conclusion. Email validation was something else Copilot just took for granted, but since I know the requirements of our backend I could steer the PR away from unnecessarily complex email validation.
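To give a feel for what "not unnecessarily complex" can mean, here is a rough sketch of a deliberately simple email check. This is not our actual backend code: the function name `looks_like_email` and the exact rule are hypothetical, and what counts as "enough" validation depends entirely on your own requirements.

```python
def looks_like_email(value: str) -> bool:
    """Cheap sanity check: something@domain.tld, nothing more.

    Hypothetical helper for illustration only. It does not try to
    implement the full email address grammar.
    """
    local, sep, domain = value.strip().rpartition("@")
    if not sep or not local:
        return False
    # The domain needs a dot that is neither its first nor its last character.
    return "." in domain and not domain.startswith(".") and not domain.endswith(".")
```

A check like this only catches obvious typos. Whether the address really exists is a separate question that no amount of pattern matching will answer.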

In the end Copilot made me spend more time than needed on this PR, sending me off to do unnecessary checks when its comments were incorrect. So far this has been the usual experience with Copilot's code reviews. Our (Blockonomics) code base is about mid-sized for a payment SaaS (around 20K LOC for the backend), so it is understandable that Copilot can't take everything into account. Basically, for now the PR review functionality is useless for us. With some context management and an instruction file the results could be better, but since there is no guarantee, and I would never trust Copilot's review alone, we will keep on using our human brains for our pull request reviews.