Man vs AI - Building a Slack Bot

Background

We have an automated API testing service built with Bruno, which runs functional tests against our production and staging environments. Once a run is done, it writes the results to a file. This is all fine and dandy, but it gives our developers no feedback about the test results. This is where this article comes in: we want a bot that scrapes these results and posts them to our Slack channel if any of the tests fail.

I have been curious about how well the AI models offered by GitHub Copilot currently perform, so I set myself a challenge: first I will build the bot on my own, using only the official Slack documentation, and then I will vibe code the same bot using only Copilot. Finally, I will compare the time taken and the quality of the end products (quality being my own subjective judgment).

The only preparation I did was to locate the Slack documentation and set up the prerequisites for actually letting the bot push messages to our Slack channels (creating a Slack app, requesting scopes and adding the app to our Slack workspace).

Expectations

Since this is a relatively simple task, I expected it to take me about an hour. Similarly, I expected the AI to do well with this sort of task: the scope is limited, and all of the documentation required, code examples included, can be found on the open internet.

Requirements of the final product

Here is what the bot should do:

- Read the test results and on test failure post to Slack

- Send a ping to healthchecks.io so we know the bot keeps working in the background

- Log events to a file

The Man

Since most of our codebase is written in Python, this bot will be written in Python too. I followed my usual flow for building something new:

- Read the docs

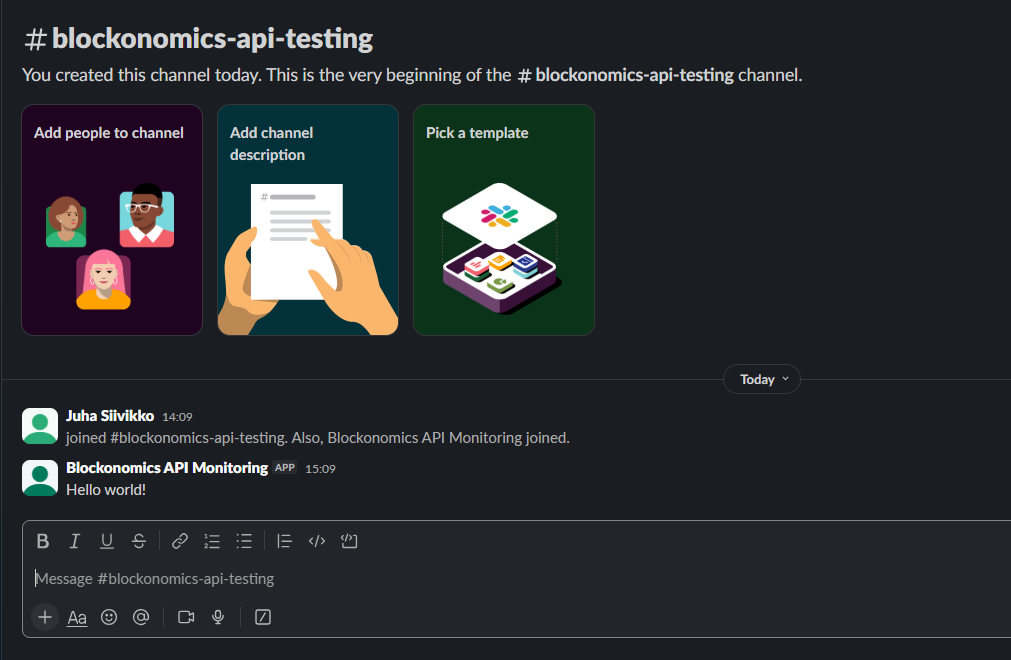

- Build the MVP functionality: in this case, I wanted the bot to send a Hello World message to our monitoring channel

- Draw the rest of the owl

The MVP

The Slack documentation has a pretty good example, so just by copying it and swapping in our own secrets we get the bot running and posting to Slack.
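For reference, here is a minimal sketch of a Hello World post with the Python Slack SDK, assuming a bot token with the `chat:write` scope and a channel ID you supply. The function name `post_hello_world` is hypothetical, and the client is passed in rather than created inside so the function can be exercised without network access.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported only for type checkers, not at runtime
    from slack_sdk import WebClient


def post_hello_world(client: "WebClient", channel_id: str) -> str:
    """Post a Hello World message and return the timestamp Slack assigns to it."""
    # chat_postMessage wraps Slack's chat.postMessage Web API method;
    # the bot token needs the chat:write scope for this call to succeed.
    response = client.chat_postMessage(channel=channel_id, text="Hello World")
    # Slack identifies a posted message by its channel and "ts" timestamp.
    return response["ts"]
```

In the bot itself you would pass a real client, e.g. `post_hello_world(WebClient(token=os.environ["SLACK_BOT_TOKEN"]), channel_id)`, and catch `slack_sdk.errors.SlackApiError` around the call.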

The rest of the owl

The bot can now post messages, but what we need are the test results whenever any of the tests fail. While coding the Hello World message, I came across file uploads in the Slack API. This was a lightbulb moment 💡, since I had not considered it before. My initial plan was to convert the report into some sort of string, maybe even with fancy formatting. But once I saw that sending a file is very similar to sending a message, I decided it is much easier to just send the full report itself. At this point I was curious to see what solution the AI would come up with, since the prompt does not mention how to publish the report.

Bruno outputs the results like this when there is a failure (shortened for clarity):

```
tx_details/tx_details_confirmed (200 OK) - 55 ms

Post-Response Tests
✕ each vin entry has valid structure
expected 4979344 to be below +0
✓ status code is 200
✓ response contains all expected fields
...

📊 Execution Summary
┌───────────────┬──────────────┐
│ Metric        │ Result       │
├───────────────┼──────────────┤
│ Status        │ ✗ FAIL       │
├───────────────┼──────────────┤
│ Requests      │ 1 (1 Failed) │
├───────────────┼──────────────┤
│ Tests         │ 0/0          │
├───────────────┼──────────────┤
│ Assertions    │ 0/0          │
├───────────────┼──────────────┤
│ Duration (ms) │ 55           │
└───────────────┴──────────────┘
```

We can search for “Status │ ✗ FAIL” with the regex `Status.*FAIL`, and if it matches we know that at least one test has failed. So now we just need the bot to send the file over to Slack.
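A small check shows why the pattern is left unanchored. With `re.search`, an anchored `^Status(.*)FAIL$` only matches a line that literally starts with `Status` and ends with `FAIL`, while Bruno's summary row starts and ends with box-drawing characters. The helper `report_has_failed_status` is a hypothetical name, and the padding in the sample row is assumed.

```python
import re

# Unanchored pattern: "Status", anything on the same line, then "FAIL".
FAIL_PATTERN = re.compile(r"Status.*FAIL")

# Anchored variant: the line must start with "Status" and end with "FAIL".
ANCHORED_PATTERN = re.compile(r"^Status(.*)FAIL$")


def report_has_failed_status(report: str) -> bool:
    """Return True if any line of the report contains a Status ... FAIL row."""
    return any(FAIL_PATTERN.search(line) for line in report.splitlines())


# The summary row as drawn in the report, wrapped in box-drawing characters.
status_row = "│ Status        │ ✗ FAIL       │"
```

Because `.` does not match newlines, the unanchored pattern still only fires when `Status` and `FAIL` sit on the same line.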

Let's code it out:

```python
...

def publish_test_results():
    try:
        response = client.files_upload_v2(channel=CHANNEL_ID, file=TEST_RESULTS_FILE)
        assert response["file"]  # metadata of the uploaded file
    except SlackApiError as e:
        # On failure Slack responds with ok=False and an error code
        assert e.response["ok"] is False
        assert e.response["error"]
        print(f"Got an error: {e.response['error']}")


def read_test_results():
    with open(TEST_RESULTS_FILE, encoding="utf-8") as file_reader:
        for line in file_reader:
            if re.search(r"Status.*FAIL", line):
                publish_test_results()
                break  # one Status row per report, so upload the report only once

...
```

This does the bare minimum for us. There are some glaring issues to fix before this is production ready, though:

- We want logging for all of the errors that might happen so that we can later debug any issues that might arise

- We want health checks for this bot: when the tests pass, it should send an ALL OK ping

- We don’t want to store configuration in the Python file, so some kind of external configuration is required

After iterating on these last changes to make the code production ready, we have:

```python
import os
import re
import logging
from logging.handlers import RotatingFileHandler
from pathlib import Path

import requests
from dotenv import load_dotenv
from slack_sdk import WebClient
from slack_sdk.errors import SlackApiError

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',
    handlers=[
        RotatingFileHandler(
            'slack_bot.log',
            maxBytes=1024*1024,  # 1MB
            backupCount=5
        ),
        logging.StreamHandler()
    ]
)
logger = logging.getLogger(__name__)

# Load environment variables from a .env file if present.
load_dotenv()

TEST_RESULTS_FILE = "test_results.txt"
FAIL_PATTERN = r"Status.*FAIL"

SLACK_BOT_TOKEN = os.getenv("SLACK_BOT_TOKEN")
CHANNEL_ID = os.getenv("SLACK_CHANNEL_ID")
HEALTHCHECK_URL = os.getenv("HEALTHCHECK_URL")

if not SLACK_BOT_TOKEN:
    raise ValueError("SLACK_BOT_TOKEN environment variable is required")
if not CHANNEL_ID:
    raise ValueError("SLACK_CHANNEL_ID environment variable is required")

# Initialize Slack client.
client = WebClient(token=SLACK_BOT_TOKEN)


def publish_test_results():
    try:
        client.files_upload_v2(
            channel=CHANNEL_ID,
            file=TEST_RESULTS_FILE,
            title="Test Results - FAILED"
        )
        logger.info("Successfully uploaded test results to Slack")
    except SlackApiError as e:
        logger.error(f"Failed to upload file: {e.response['error']}")


def read_test_results():
    if not Path(TEST_RESULTS_FILE).exists():
        logger.warning(f"Test results file not found: {TEST_RESULTS_FILE}")
        return

    # The report contains non-ASCII characters (✓, ✕, box drawing), so read it as UTF-8.
    with open(TEST_RESULTS_FILE, encoding="utf-8") as file_reader:
        content = file_reader.read()

    if re.search(FAIL_PATTERN, content):
        logger.info("Test failures detected, publishing to Slack")
        publish_test_results()
    else:
        logger.info("All tests passed...")
        # Send the ALL OK ping only when the tests pass.
        if HEALTHCHECK_URL:
            try:
                requests.get(HEALTHCHECK_URL, timeout=10)
                logger.info("Healthcheck ping sent")
            except requests.RequestException as e:
                logger.error(f"Failed to send healthcheck ping: {e}")


if __name__ == "__main__":
    read_test_results()
```

Cool, pretty happy with that. There are more improvements we could make, but we are now approaching diminishing returns. Do we really need to separate concerns and move the Slack API calls into a class of their own? Do we need to add docstrings, or unit tests? Probably not: our bot does what it needs to, and if it ever grows we will deal with these problems then.

The total time spent came to right around an hour. Most of it went into iterating on the MVP until it met all the requirements for production-ready code. Additional time went into reconfiguring the Slack app with new permissions for file handling. As I was already familiar with pretty much everything except the Slack API, the work mostly reused patterns I had applied many times before.

Now let's see what the AI comes up with 🤖

The AI

The AI overlords were represented by Claude Opus 4.5. Since I am used to working with Claude Sonnet 4.5, the choice was between Sonnet 4.5 and Opus 4.5. In the end I chose Opus, as it is said to perform better on complex, multistep assignments carried out in a more agentic way.

To keep the starting point equal, the first prompt was written before I started my own version of the bot.

Prompt 1

“Create a Slack bot that sends the results of these tests to our Slack channel in case of any of the tests failing. The bot should be written in python and should run in an Ubuntu server. The bot should use Slack SDK. I have attached one failing and one passing test result file as an example.”

Opus got right to work, and work it did! It produced 278 LOC for the bot plus a full README covering how to set up the Slack app, install the bot and configure CI, and even troubleshooting instructions. The full result can be found here. Let's review.

The code parses the test result files using these regex patterns:

```python
    ...
    # Extract test name (first line usually contains it)
    lines = content.strip().split('\n')
    if lines:
        first_line = lines[0]
        # Extract test name before the status code
        match = re.match(r'^(.+?)\s*\(', first_line)
        if match:
            results['test_name'] = match.group(1).strip()

    # Parse passed tests (✓)
    passed_pattern = r'✓\s+(.+)'
    results['passed_tests'] = re.findall(passed_pattern, content)

    # Parse failed tests (✕)
    failed_pattern = r'✕\s+(.+?)(?:\n\s+expected.+)?'
    failed_matches = re.finditer(r'✕\s+(.+)', content)
    ...

    # Extract duration
    duration_match = re.search(r'Duration \(ms\)\s*│\s*(\d+)', content)
    if duration_match:
        results['duration_ms'] = int(duration_match.group(1))

    # Extract request counts
    requests_match = re.search(r'Requests\s*│\s*(\d+)\s*\((\d+)\s*(Failed|Passed)\)', content)
    if requests_match:
        results['total_requests'] = int(requests_match.group(1))
        count = int(requests_match.group(2))
        if requests_match.group(3) == 'Failed':
            results['failed_requests'] = count

    return results
```

I am worried about the complexity of this code. First, the regexes are convoluted: I cannot decipher what they mean without spending at least 10 minutes with a regex tester. Second, this will break as soon as Bruno's output changes (which it did in the latest 3.0 release!). So this is not very maintainable. But no worries, let's ask Opus to make the code more straightforward!
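To show what one of these patterns actually does, here is the AI's request-count regex rewritten with Python's `re.VERBOSE` flag, which ignores whitespace inside the pattern and allows `#` comments. Both forms are checked against the Requests row of the sample output; the lowercase "failed" row is a hypothetical format change, used to show how little it takes to break the match.

```python
import re

# The AI's request-count regex, unchanged.
COMPACT = re.compile(r'Requests\s*│\s*(\d+)\s*\((\d+)\s*(Failed|Passed)\)')

# The same pattern with re.VERBOSE: whitespace in the pattern is ignored
# and text after an unescaped hash sign is treated as a comment.
VERBOSE = re.compile(
    r"""
    Requests \s* │ \s*   # row label, then the column separator
    (\d+)                # group 1: total number of requests
    \s* \(               # opening parenthesis of the breakdown
    (\d+) \s*            # group 2: the count inside the parentheses
    (Failed|Passed)      # group 3: what that count refers to (case-sensitive)
    \)                   # closing parenthesis
    """,
    re.VERBOSE,
)

row = "│ Requests      │ 1 (1 Failed) │"
```

Even spelled out like this, the pattern is tied to the exact wording and casing of Bruno's summary table.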

Prompt 2

“I am worried this code is not easy to maintain. The regex is too complex and if the output of the test results change the code will break. Can you make it more simple and less prone to break if the test result format changes slightly. I do not care so much about the fancy result print out.”

As always, the AI is eager to please:

You're right - the regex parsing is fragile. Let me simplify it to just detect failures and send the raw output to Slack. The bot went from ~180 lines to ~70 lines, and will keep working even if the test output format changes - as long as failures contain one of the indicator keywords.

Let's review, and again, here is the full code. Failure detection is now based on keywords appearing in the test results:

```python
# Keywords that indicate test failure - add more as needed
FAILURE_INDICATORS = ['FAIL', '✕', 'failed', 'error', 'Error', 'FAILED']

def has_failures(content: str) -> bool:
    """
    Check if the test output contains any failure indicators.
    Simple string matching - no regex needed.
    """
    return any(indicator in content for indicator in FAILURE_INDICATORS)
```

This is OK, I guess, but since any one of the listed words triggers the failure report, there is a chance of false positives: any of these words could appear on its own in a passing report.
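A quick illustration of the false-positive risk, using Opus's keyword check as written. The passing report is made up: every test passes, but two test names happen to contain trigger words. For contrast, the same text goes through the `Status.*FAIL` check from my own version (here as a hypothetical `summary_says_fail` helper), which only looks at the summary row and would break in its own way if Bruno renamed that row.

```python
import re

# Opus's keyword list and check from the second iteration.
FAILURE_INDICATORS = ['FAIL', '✕', 'failed', 'error', 'Error', 'FAILED']


def has_failures(content: str) -> bool:
    return any(indicator in content for indicator in FAILURE_INDICATORS)


def summary_says_fail(content: str) -> bool:
    """Only trust a Status ... FAIL row (both words on the same line)."""
    return re.search(r"Status.*FAIL", content) is not None


# Hypothetical passing report: all checks pass, but the test names
# contain the trigger words "error" and "failed".
passing_report = "\n".join([
    "✓ status code is 200",
    "✓ unknown id returns an error object",
    "✓ failed transactions are filtered out",
])
```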

This time Opus decides to send the report as-is in the message itself, although it takes Slack's ~3000-character limit into account:

```python
def send_slack_notification(token: str, channel: str, file_path: str, content: str) -> bool:
    """
    Send test results to a Slack channel.
    Just sends the raw output - no fancy formatting.
    """
    client = WebClient(token=token)

    # Truncate if too long for Slack (max ~3000 chars for code block)
    max_length = 2900
    if len(content) > max_length:
        content = content[:max_length] + "\n... (truncated)"

    message = f"🔴 *Test Failure Detected*\n\n```{content}```"
    ...
```

This is a problem: if the failing API appears after the truncation point, the devs will never know what the actual failure was. The README has also become outdated.
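The truncation problem is easy to reproduce. This sketch isolates the truncation step from Opus's second iteration into a hypothetical `truncate_for_slack` function and feeds it a made-up report whose only failure sits at the very end.

```python
# Cut-off used in Opus's second iteration.
MAX_LENGTH = 2900


def truncate_for_slack(content: str, max_length: int = MAX_LENGTH) -> str:
    """Same logic as the AI's code: keep the first max_length characters."""
    if len(content) > max_length:
        return content[:max_length] + "\n... (truncated)"
    return content


# Hypothetical long report: 200 passing checks (21 characters each),
# followed by a single failure at the end.
report = "✓ status code is 200\n" * 200 + "✕ each vin entry has valid structure\n"
message = truncate_for_slack(report)
```

The failure marker is in the report but not in the message, so the one line the developers need is exactly the one that gets dropped.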

Prompt 3

“This is a lot better but can you figure out how to send the whole test result. If the failing API is below the truncated line there is no way to know which test failed. Also the README is now outdated”

Now I'll update the Python code to upload the file instead of truncating, and update the README

So we end up with a solution very similar to what I wrote. This might be because my follow-up prompts were influenced by my earlier design decisions, but I tried not to hint at the actual implementation the AI should use.

Now we just need logs and health checks and we are done.

Prompt 4

“Perfect. Can you add logging to a file. Also if no failures are detected send a ping to healthchecks.io”

Here we have the final full code:

```python
#!/usr/bin/env python3
"""
Slack Test Notifier Bot

Sends test results to a Slack channel when tests fail.
"""

import os
import sys
import logging
import urllib.request
import urllib.error

from slack_sdk import WebClient
from slack_sdk.errors import SlackApiError

# Configure logging
LOG_FILE = os.environ.get('LOG_FILE', '/var/log/slack_test_notifier.log')

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s',
    handlers=[
        logging.FileHandler(LOG_FILE),
        logging.StreamHandler()
    ]
)
logger = logging.getLogger(__name__)

# Keywords that indicate test failure - add more as needed
FAILURE_INDICATORS = ['FAIL', '✕', 'failed', 'error', 'Error', 'FAILED']


def read_test_results(file_path: str) -> str:
    """Read test results from a file."""
    with open(file_path, 'r', encoding='utf-8') as f:
        return f.read()


def has_failures(content: str) -> bool:
    """
    Check if the test output contains any failure indicators.
    Simple string matching - no regex needed.
    """
    return any(indicator in content for indicator in FAILURE_INDICATORS)


def ping_healthcheck(url: str) -> bool:
    """
    Ping healthchecks.io to indicate successful test run.
    """
    if not url:
        return True
    try:
        req = urllib.request.Request(url, method='GET')
        with urllib.request.urlopen(req, timeout=10) as response:
            logger.info(f"Healthcheck ping sent successfully: {response.status}")
            return True
    except urllib.error.URLError as e:
        logger.error(f"Failed to ping healthcheck: {e}")
        return False


def send_slack_notification(token: str, channel: str, file_path: str, content: str) -> bool:
    """
    Send test results to a Slack channel.
    Uploads the full results as a text file snippet.
    """
    client = WebClient(token=token)
    try:
        # Upload the full content as a file snippet - no truncation
        client.files_upload_v2(
            channel=channel,
            content=content,
            filename=os.path.basename(file_path),
            title="🔴 Test Failure Detected",
            initial_comment="Test failures detected. See full results below:"
        )
        logger.info(f"Results uploaded to {channel}")
        return True
    except SlackApiError as e:
        logger.error(f"Failed to send to Slack: {e.response['error']}")
        return False


def main():
    """Main entry point."""
    # Configuration from environment variables
    slack_token = os.environ.get('SLACK_BOT_TOKEN')
    slack_channel = os.environ.get('SLACK_CHANNEL', '#test-results')
    healthcheck_url = os.environ.get('HEALTHCHECK_URL')  # e.g., https://hc-ping.com/your-uuid

    if not slack_token:
        logger.error("SLACK_BOT_TOKEN environment variable is required")
        sys.exit(1)

    if len(sys.argv) < 2:
        logger.error("Usage: python slack_test_notifier.py <test_results_file>")
        sys.exit(1)

    test_file = sys.argv[1]
    if not os.path.exists(test_file):
        logger.error(f"File not found: {test_file}")
        sys.exit(1)

    logger.info(f"Checking test results: {test_file}")
    content = read_test_results(test_file)

    if has_failures(content):
        logger.warning("Failures detected, sending to Slack...")
        success = send_slack_notification(slack_token, slack_channel, test_file, content)
        sys.exit(0 if success else 1)
    else:
        logger.info("All tests passed. No notification sent.")
        ping_healthcheck(healthcheck_url)
        sys.exit(0)


if __name__ == '__main__':
    main()
```

And the final full repo can be found here.

The final result is pretty much the same as what I wrote myself. I don’t think my prompts influenced the design too much, but at this point it is impossible for me to tell.

The README is now outdated again. I tried to get it updated, but I got this message:

Sorry, the upstream model provider is currently experiencing high demand. Please try again later or consider switching to GPT-4.1.

It would have been very annoying to get this earlier during the vibe coding session...

Results

What the AI did well:

- The code actually does what was required

- The code follows best practices, for example:

  - Type hints, e.g. `has_failures(content: str) -> bool:`

  - Functions terminate cleanly, i.e. they return something or call `sys.exit(0)`

  - Docstrings are included (although they smell of over-explanation and unnecessary commenting)

- A really good README with instructions on how to set up the Slack app, how to run the code, how to troubleshoot and how to make it run in a CI environment

- Printed messages, logs and Slack messages are all nicely formatted

Some issues:

- Multiple prompts were required

- The end product differed a lot from the initial code (the first version had over 2x the LOC)

- The code feels bloated even after re-prompting

- My code has 30% fewer LOC for the same functionality (docstrings excluded from the count)

- Functions carry lots of arguments, e.g. `send_slack_notification(slack_token, slack_channel, test_file, content)`

- Unused arguments: `file_path` in `send_slack_notification()`

- Test failure detection is iffy and will probably cause false positives
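On the argument count: one common way to shrink signatures like these, sketched here as an assumption rather than something either version of the bot does, is to load the settings once into a small frozen dataclass. `NotifierConfig` and `from_env` are hypothetical names; the environment variable names and the `#test-results` default are taken from the AI's code.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class NotifierConfig:
    """Hypothetical settings object: read once, then passed around as one value."""

    slack_token: str
    slack_channel: str = "#test-results"
    healthcheck_url: Optional[str] = None

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "NotifierConfig":
        """Build the config from environment variables (or any mapping, e.g. in tests)."""
        token = env.get("SLACK_BOT_TOKEN")
        if not token:
            raise ValueError("SLACK_BOT_TOKEN environment variable is required")
        return cls(
            slack_token=token,
            slack_channel=env.get("SLACK_CHANNEL", "#test-results"),
            healthcheck_url=env.get("HEALTHCHECK_URL") or None,
        )
```

A reshaped `send_slack_notification(config, test_file, content)` (again hypothetical) would then carry the token and channel as one value, and tests can pass a plain dict instead of touching `os.environ`.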

Conclusion

With one or two more prompts, I would probably have arrived at a solution I would have been 95% happy with. Unfortunately, the model provider was too busy to serve me. That aside, Claude Opus did a relatively good job.

Comparing the time taken: it took me around an hour to complete the bot, of which at least 20 minutes went into reading the Slack SDK documentation and fiddling with the app settings. With the AI I was done in 25 minutes after four prompts, including testing the code and doing a code review on each iteration. The biggest time saving from the AI definitely came from not needing to read documentation; Opus even provided a full guide on how to set up the Slack app. I did not redo the updated app configuration for the AI's code, so I estimate that completing the whole project with Opus would have taken about half an hour.

The end product

After the first prompt the bot did exactly what it was asked to do, but the code would not have passed our internal code reviews in any way, shape or form. I had to know what the issues in the code were and what kind of improvements it needed. Without that knowledge, the half-baked code would not have worked properly (truncated report, crazy regex, breaking easily).

The lessons

By writing the bot myself, I got to know the Slack SDK and got some ideas for future projects. I also know exactly what the code does and what its limitations are. I cannot say I am 100% confident that I know the AI's code; I am merely an observer of it.

If I had written the whole bot with AI, there would have been zero learning. For this code I used things I am familiar with from previous projects (logging, health checks, configs), tools I have studied and used in different ways across different scenarios. If I had relied only on AI, I would not have the Slack SDK in my arsenal for future projects.

The AI did what I wanted, but I knew what I wanted and mostly knew how to get there. That is because I have spent time writing code and reading the docs myself. AI can save time, but there is no personal development involved.